A future model of AirPods may be able to detect when they are not properly seated in a user’s ear using ultrasonic ranging, and then either alert the wearer or reroute the audio to another device.

You might take it for granted that the sound will be poor if your AirPods Pro are not positioned correctly in your ear. Apple, though, wants your AirPods to alert you to this fact, and potentially to determine whether you actually want to listen to another device instead.

It’s part of Apple’s aim of creating an ecosystem where you don’t have to think about switching devices on or off; the devices just respond to what you want. We already see that in the way AirPods Pro automatically switch from your iPhone to your iPad, even when you don’t want them to.

“Ultrasonic proximity sensors, and related systems and methods,” is a newly granted patent that aims to work out when you want to listen through your AirPods, and when you want to listen through your iPhone’s speaker. And if you’re going to use your AirPods, it’s going to make sure you wear them properly for the best audio experience.

“Perceived sound quality and other measures of performance for an audio accessory can vary in correspondence with a local environment in which the audio accessory is placed,” says Apple. “For example, perceived sound quality can deteriorate if an in-ear earphone is not well-seated in a user’s ear.”

The patent is chiefly concerned with how the AirPods, or similar devices, could make this determination. It proposes that this could be done using an “ultrasonic proximity sensor” which “can determine one or more characteristics of a local environment.”

That local environment can be the room a user is standing in, or equally it can be the inside of their ear. Specifically, the AirPods could “emit an ultrasonic signal into a local environment and can receive an ultrasonic signal” in return.

“From a measure of correlation between the emitted and the received signals, the sensor can classify the local environment,” continues the patent. “By way of example, such a sensor can assess whether an in-ear earphone is positioned in a user’s ear.”
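To make the correlation idea concrete, here is a minimal Python sketch of classifying an environment from how closely a received signal matches an emitted one. It is an illustration under stated assumptions, not Apple’s method: the 40 kHz probe tone, 192 kHz sample rate, 0.7 threshold, the function names, and the premise that a strong, coherent echo means the earbud is seated in an ear are all hypothetical choices, and the “received” signals are simulated rather than measured.

```python
import math
import random

def tone_burst(freq_hz, sample_rate_hz, num_samples):
    """Generate a sine burst to act as the emitted ultrasonic probe (hypothetical parameters)."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(num_samples)]

def peak_normalized_correlation(emitted, received, max_lag):
    """Return the largest normalized cross-correlation (0..1) over lags 0..max_lag."""
    e_energy = math.sqrt(sum(x * x for x in emitted))
    best = 0.0
    for lag in range(max_lag + 1):
        segment = received[lag:lag + len(emitted)]
        if len(segment) < len(emitted):
            break  # not enough received samples left for this lag
        r_energy = math.sqrt(sum(y * y for y in segment))
        if e_energy == 0 or r_energy == 0:
            continue
        dot = sum(x * y for x, y in zip(emitted, segment))
        # Cauchy-Schwarz keeps this ratio within [0, 1].
        best = max(best, abs(dot) / (e_energy * r_energy))
    return best

def classify_environment(emitted, received, max_lag=64, threshold=0.7):
    """Classify the local environment from emitted/received correlation.

    Assumption (not from the patent): a strong, coherent echo means the earbud
    is sealed in an ear; a weak or noisy return means it is in open air.
    """
    score = peak_normalized_correlation(emitted, received, max_lag)
    return ("in_ear" if score >= threshold else "not_in_ear"), score

# Simulated usage: both "received" signals are synthetic.
rng = random.Random(0)
probe = tone_burst(40_000, 192_000, 256)
delay = 10
# In-ear case: a delayed, attenuated copy of the probe plus a little noise.
in_ear_rx = [0.0] * delay + [0.6 * s for s in probe] + [0.0] * 64
in_ear_rx = [y + rng.gauss(0, 0.02) for y in in_ear_rx]
# Open-air case: the return is dominated by noise.
open_air_rx = [rng.gauss(0, 0.3) for _ in range(len(probe) + 74)]

print(classify_environment(probe, in_ear_rx)[0])    # in_ear
print(classify_environment(probe, open_air_rx)[0])  # not_in_ear
```

Normalizing by both signals’ energy makes the score depend on how well the return matches the probe’s shape rather than on how loud it is; a real sensor would also have to contend with hardware response, temperature, and movement, which this sketch ignores.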

With one exception, the rest of the patent is not especially concerned with what the AirPods could do with this information. However, once they know they are not fitted correctly, they could at least issue a notification to the user’s iPhone, or perhaps bleep in the ear.

They could also potentially alter the audio being played. That might include compensating to preserve a proper stereo mix even when one AirPod is slightly out of alignment in the ear.

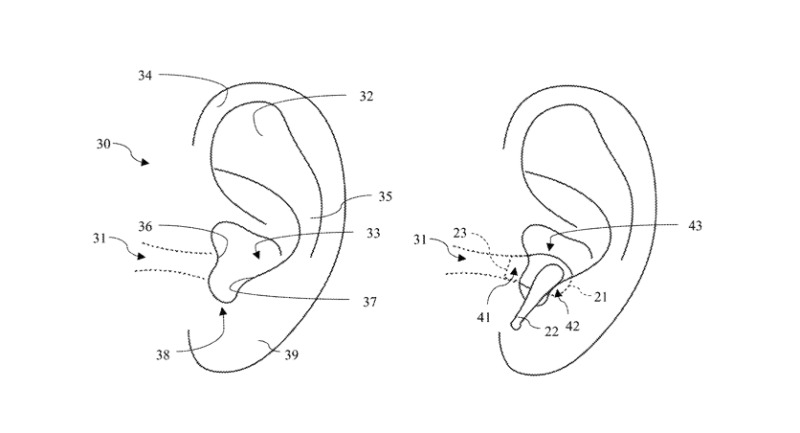

Detail from the patent showing how an ultrasonic signal could determine whether AirPods are seated correctly in a user’s ear

The patent’s next concern is determining why the user hasn’t put the AirPods in properly. If it’s a mistake, or they’ve worked loose, a notification may be needed.

However, if it’s because the user actually wants to listen to something else, the patent wants the AirPods to figure that out and stop sending audio to the earbuds.

“[AirPods could] redirect the audio signal to another playback device responsive to a determination by the sensor that the earphone is not in the user’s ear,” says the patent.

So you could be listening to music coming from your iPhone, via AirPods. Then if you take those AirPods out, the music might instead automatically play from a nearby HomePod.

This patent is credited to Charles L. Greenlee. His previous work includes a related patent for a different ultrasonic sensor, also for Apple.

Apple has repeatedly investigated the use of ultrasonic sensors for a wide range of applications. This example of AirPods telling an iPhone to reroute audio relates to prior research into how devices could use ultrasonic pings to determine how near other devices are.

Recently, it was revealed that Apple has been looking at using ultrasonic sensors as part of user authentication. In future, Siri may use multiple technologies, including ultrasonic, to identify when different users are speaking.

Meanwhile, the forthcoming “Apple Glass” may use ultrasonic sensors to determine where it physically is within an environment.

Note that Apple is granted a great many patents, and not all of them will result in products, or in features within those products. A similar patent regarding AirPods Max, for instance, suggested that a U1 chip would detect which way around the headphones were being worn. As yet, Apple has not introduced that feature.